A Framework for Student Success Analytics

Introduction

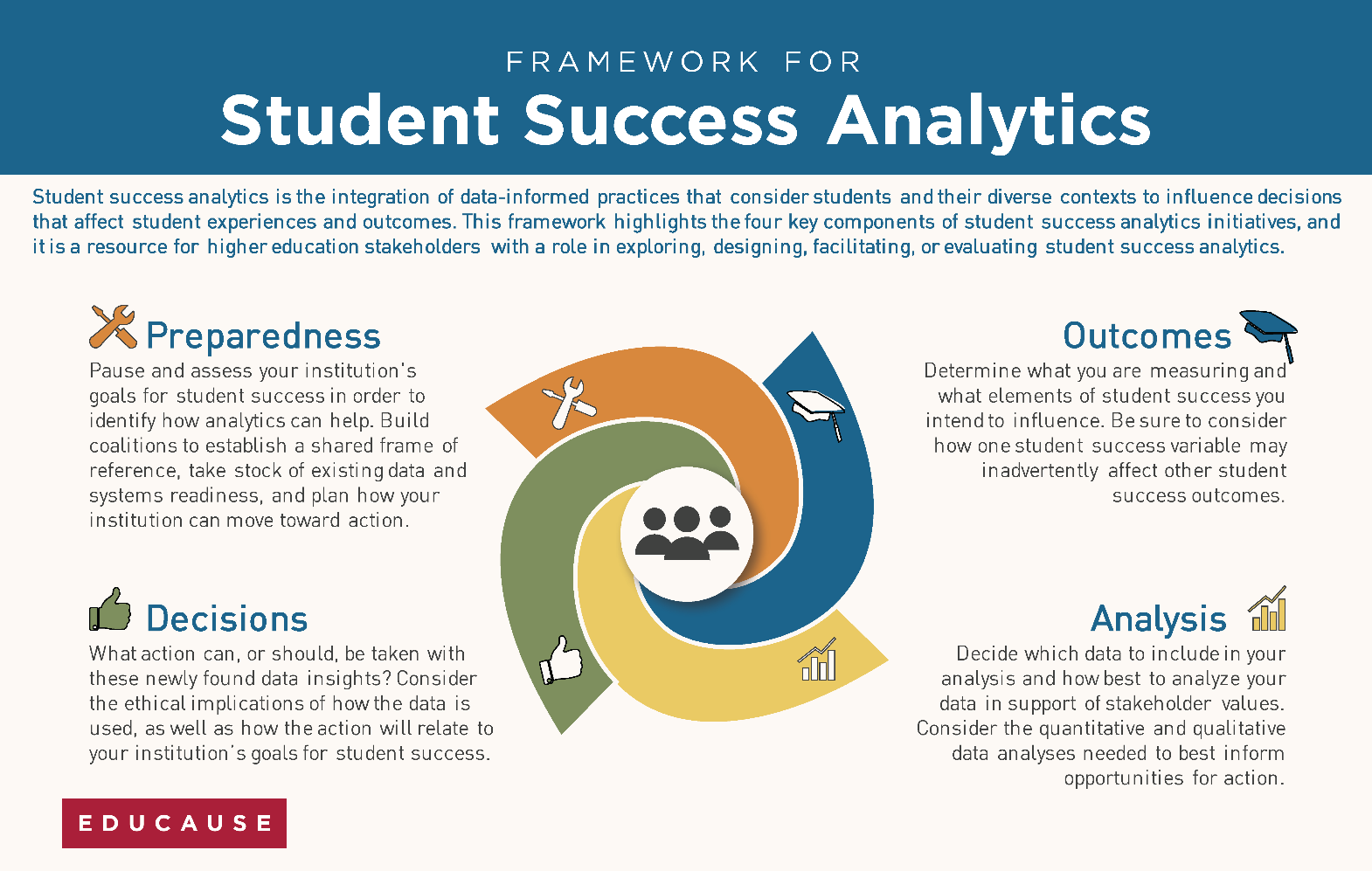

Student success analytics is the integration of data-informed practices that consider students and their diverse contexts to influence decisions that affect student experiences and outcomes. Whether your institution is already under way with student success analytics initiatives or just beginning to evaluate the use of data to inform practice, a framework can help stakeholders pause, assess, and plan.

The purpose of this framework is to introduce users to the central components of a student success analytics initiative, providing a shared point of reference for the institutional stakeholders involved. The four central components described here (Preparedness, Outcomes, Analysis, and Decisions) are interdependent and should be approached as equally important areas for consideration and planning in any student success analytics initiative. The following framework can be used by any higher education staff member, faculty member, or administrator with a role in implementing initiatives that impact student outcomes.

In 2023, a working group developed a rubric to support the use of this framework. A case study in EDUCAUSE Review describes how UC Irvine implemented the rubric.

An overview of this framework is shown in Figure 1.

Preparedness

Though each student success analytics project may require unique preparation, multiple factors and considerations can help an institution maximize its opportunity to facilitate an effective, data-informed initiative. Let these considerations and markers of readiness guide your preparation as you undertake a new project or continue with an existing one.

Orient to Your Mission

As you set out to develop a student success analytics initiative, your institution's mission provides a critical framing device for developing questions to guide your work. To begin, ask, "What does student success mean at our institution?" Depending on institutional mission, context, strategic priorities, and many other factors, student success could encompass a variety of meanings, such as any of the following:

- Student retention and persistence outcomes

- Bridges to meaningful employment in students' chosen fields

- Wellness supported by the capacity of campus health resources

- Meaningful connections between students and their faculty and staff

- Achievement of new competencies or learning objectives using innovative teaching modalities

Understanding the meaning of student success in relation to your institution's priorities can help align your project with your organization's broader goals, regardless of the level at which you work or at which your analytics project is taking place. A student success analytics planning process, therefore, will generally begin with the identification of goals for student success that can be supported by an analytics capacity. What are your institution's goals for students, and how can analytics help?

Build a Coalition

Typically, no single individual or department head "owns" all student analytics on campus or manages all the people, processes, and technology required to act on student data. It is therefore important to consider whether you have the right partnerships in place for success.

John Kotter's strategies for leading change emphasize the importance of forming a guiding coalition to drive and sustain institutional change.[1] Effective student success programs may need coordination among areas such as enrollment management, schools and colleges, information technology, institutional research, academic administration, student affairs, and faculty affairs. Do you have the cross-functional support that data-informed decisions require? Do you have the support of key leadership? The objective in evaluating your coalition is not to get bogged down by the many voices but to thoughtfully consider the partnerships that will enable your project to succeed. Conducting this informal evaluation and establishing partnerships will allow you to introduce colleagues to the goals of the effort and the benefits of implementing the initiative effectively, securing key buy-in from the start.

Establish a Shared Frame of Reference across Silos

One consideration in determining your readiness to implement an initiative is to assess whether a shared frame of reference exists for understanding student success across the different organizations involved. Student success analytics initiatives can be complex, often creating new processes and requiring coordination across multiple groups. When each group has a different idea of what student success means, communication and collaboration about analytics can become challenging.

A shared frame of reference can be established by ensuring that stakeholders understand and can easily describe the vision of student success. With clear core values, institutional stakeholders will be equipped to communicate this vision to various constituents, reinforcing the sense of urgency and importance that will ultimately sustain the shift toward using student success analytics and data in all decisions.

Assess Data and Systems Readiness

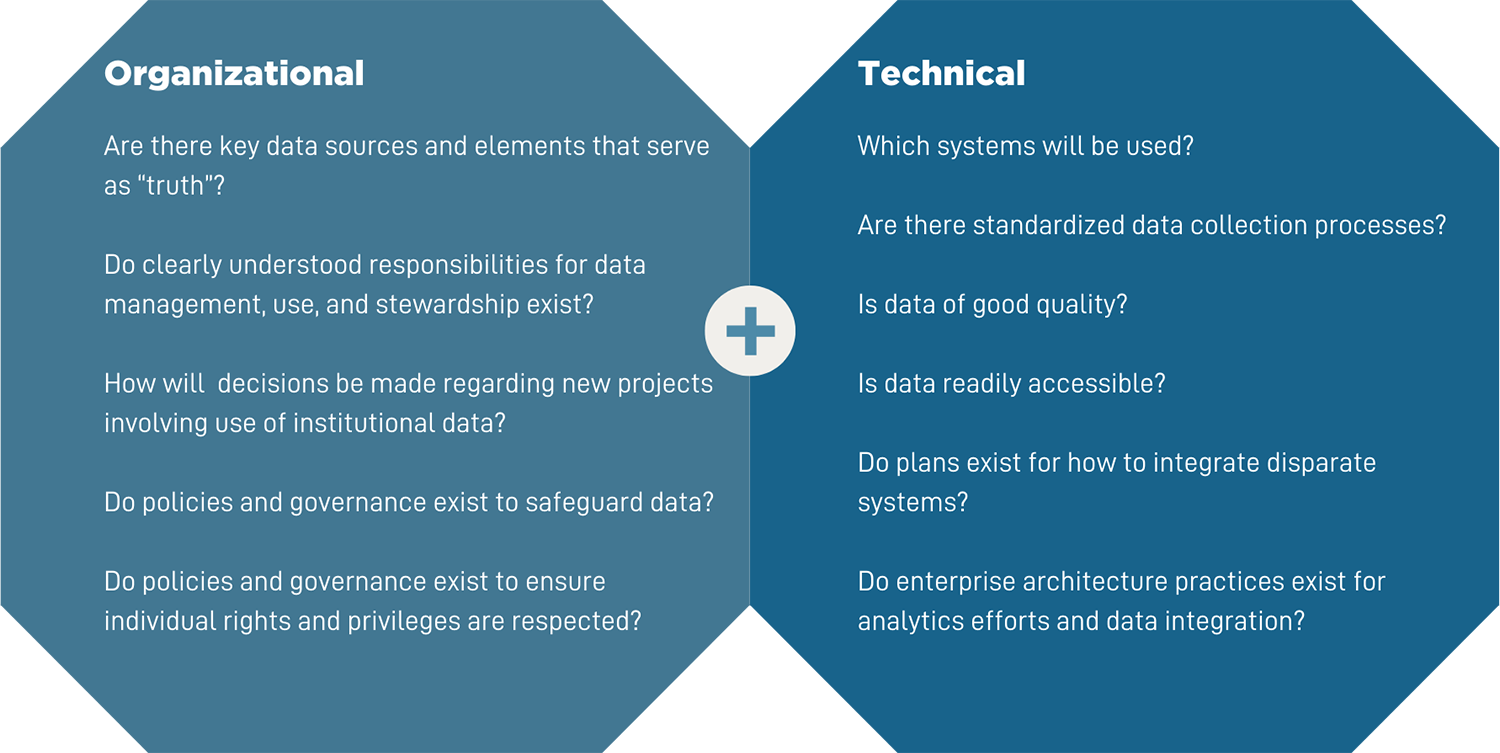

Organizational and technical dimensions of readiness are important to consider when undertaking an analytics initiative. Organizational readiness refers to considerations related to governance, culture, and policies. Technical readiness examines the maturity of existing systems and processes in order to support plans for building additional capacity if needed. See Figure 2 for examples.

An informal assessment of organizational and technical readiness can provide space to thoughtfully consider possible pitfalls and identify proactive ways to address them. As you consider such an assessment at your institution, you may find it worthwhile to lead a series of working sessions to evaluate and discuss each area. Forming a cohort through these sessions may help participants communicate with and support one another across organizational levels. Tools such as the EDUCAUSE Analytics Institutional Self-Assessment can help establish a common baseline.

Promote Data and Analytics Literacy

When new analytics initiatives are introduced, stakeholder uptake and use of data should be a major area of focus. As Taylor Swaak describes, data-defining governing boards, data-literacy training, and transparency should be at the forefront of initiatives and situated within a campus culture that supports data use.[2] The absence of these factors increases the risk of data misinterpretation and misuse, which can ultimately do more harm than good to students.

Especially in student success analytics, which is by definition action oriented, many barriers may impede the effective integration of new analytical products into existing campus practices around student success. A goal of our work should be to support and inform campus stakeholders' decision-making rather than to supplant it. Depending on the analytics project and product, stakeholders may include advising staff, deans and academic leadership, faculty, student experience support staff, campus health professionals, or the students themselves. Each of these broad groups may hold a variety of cultural norms around how evidence is perceived and interpreted.

For any analytics project, the trick is to find the right balance between negotiating stakeholder meaning-making and ensuring rigorous, responsible data interpretation. As part and parcel of the coalition-building undertaken when developing any student success analytics product, care should be taken to collect examples of how stakeholders make meaning and interpret evidence. For example, what benchmarks does a stakeholder typically find most insightful in their understanding of student success? How does that stakeholder interpret those benchmarks, and what assumptions exist? The resulting data and analytics can then be framed to fit directly into stakeholder paradigms. At the same time, the insights presented from our projects can be awe-inspiring, mysterious, or downright intimidating to stakeholders and often cannot be made entirely transparent in terms of source or methodology. The strong collaborative relationship with stakeholders that facilitated the development of the analytics product should therefore be leveraged to educate stakeholders on appropriate uses and interpretations of the product, even in the early stages of product design.

Look for the Ability to Move toward Action

Often, student success analytics focuses on data: collection, management, access, visualization, and more. Equally important, however, is the ability to operationalize and act on the data. Coordinating and prioritizing data, performing data-informed interventions, and measuring the impact of student success analytics may also require technology, people, and processes. After you collect data, will you have the ability to act on it? Can analytics be used to help prioritize or improve existing interventions?

Building a practice of student success analytics on campus is an iterative process. Be prepared to try different approaches and learn from your successes and failures. By allowing yourself the time to understand your readiness and being open to the journey, you will be well positioned to achieve your goals. Changes made today may not immediately result in measurable strides, but with continual improvement and longer measurement time horizons, future practice will look and feel easier than before.

Outcomes

A student success analytics initiative is designed to evaluate and enhance student experiences and outcomes. By involving all stakeholders in determining the most valued factors, and keeping in mind the multidimensional nature of student success, you can design an initiative that will most effectively inform purposeful action.

Multidimensional Nature of Success

A defining feature of student success analytics is the consideration of students' diverse contexts. No one factor in a student's life, and no one measure of that student's outcomes, can paint a comprehensive picture of a student's situation and needs with regard to student success. Grades or graduation rates, for instance, are often too narrow to be interpreted as single measures of student success for the purposes of an analytics initiative.[3] Rather, analytics initiatives should be designed with the multidimensional nature of student success in mind.

To provide a robust assessment of student success, measurement should include multiple variables from each of the following key areas of student success.[4]

- Academic achievement

- Attainment of learning objectives

- Acquisition of skills and competencies

- Student satisfaction

- Persistence

- Career success

- Student wellness

Your analysis of data should also consider the interrelated nature of the dimensions of student success. For example, learning objectives are intended to describe the specific elements within a competency that a student will attain. The achievement of learning objectives may be assessed by a passing grade on an assignment (one metric), but it may also ultimately be assessed through graduation (a separate metric). Almost any individual metric has an inherent relationship with another, and those relationships should be considered in decision-making that impacts student outcomes.

Stakeholder Lenses

Stakeholders can be classified into more granular personas to understand the factors with which each is primarily concerned (see Table 1). The student persona, for example, has "five senses of student success," according to Alf Lizzio.[5] These senses help us understand the unique perspective of this persona. The senses are sense of connectedness (relationships with peers and instructors), sense of capability (clarity, self-efficacy, competence, participation), sense of purpose (relevance, aspiration, direction, motivation), sense of resourcefulness (navigation, safety, balance), and sense of culture (academic culture, collegiality, integrity). By understanding the senses of student success through the perspective of this persona, analytics practitioners may be better equipped to consider factors such as equity in academic activities, inclusive experience, and accessibility as parts of student success.

While personas represent stakeholder groups, analytics developers must also account for how individuals differ in the value they place on student success factors. Each dimension of student success may be valued differently by different stakeholders. Consider, for example, an individual student who values attaining a desired job (a measure of career success) as the most important indicator of success. One of the student's instructors, meanwhile, most values attainment of the learning objectives. An administrator most values the student's graduation (a metric of persistence), and the student's employer most values specific skills and competencies. Moreover, this student may initially focus on job attainment but later come to see the acquisition of skills and competencies as the most important outcome. Thus, using both the lens of various personas and the lived experience of individuals is necessary to capture the breadth of needs.

With diverse stakeholders and with values that shift over time, an essential part of developing student success analytics is to regularly co-determine the priority analytics and resource allocation in a transparent, ethical manner. Students (via representation as a collective body as well as diverse individuals), institutional delegates, and external stakeholders can all provide valuable insight as to the factors and outcomes that are relevant and necessary within the context of your institution's mission and your analytics goals.

| Personas | Factors and Metrics for Student Success |
|---|---|
| Administrator | Academic achievement (GPA), persistence (graduation rate), enrollment/retention, equity (anonymous campus survey, campus climate), accessibility (Universal Design for Learning scores in student's courses, count of internal and external complaints logged, audits), engagement (campus activities, community), alumni giving and participation |
| Instructor | Course objectives, program objectives, course evaluations, engagement (course page views, ebook reading times, participation), academic achievement (assessment grades, course grade) |
| Student | Acquisition of knowledge and skills, career success (attainment, retention, promotion, salary/financial well-being), personal satisfaction (including engagement, belonging), holistic wellness, inclusive experience |
| External Stakeholders | Accreditation agency: status; Media coverage: school ratings/rankings; Employers: workforce preparedness |

Analysis

Various types of analytics can be used in the design of a student success analytics initiative, each of which lends itself to different forms of analysis. By considering which type of analytics best aligns with your project goals, you can develop an initiative in which your analysis informs opportunities for action.

Institutions use four well-known approaches to analytics to make informed decisions around student success: descriptive, diagnostic, predictive, and prescriptive. A student success analytics initiative may use any one of these four types while also drawing on one or more areas of education analytics: institutional, learning, or academic analytics.

Types of Analytics

The preparedness of the institution and the project goal will impact the type of analytics used in a student success analytics initiative. Typically, historical data is used for all of these types of analytics. Table 2 provides an overview of each type of analytics.

| Type of Analytics | How It Is Used | Sample Questions It May Answer |
|---|---|---|
| Descriptive | Describes what has happened | What are my top barrier courses to persistence into the next semester? What sequence of courses is detrimental to student persistence? |
| Diagnostic | Examines why something happened | Why are students in ___ course dropping from our institution at a higher rate than students in other classes? Why are students in the ___ major failing chemistry at a higher rate? |
| Predictive | Estimates what is likely to happen | What is the probability of hitting our desired enrollment trend? What is the probability that this student will be retained? What are the top three contexts that are impacting student probability of persisting? |
| Prescriptive | Recommends what action to take | How can we restructure the sequence of courses to promote student success in biology courses? How can we organize students into subpopulations to better offer campus resources? How does the trend of debt that students have at graduation differ across the student body? |
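As a rough illustration of the first descriptive question in Table 2, the sketch below ranks courses by the share of their enrolled students who did not return the following term. It assumes a hypothetical record layout (an `Enrollment` with `student_id`, `course`, and `persisted` fields), an illustrative minimum-enrollment cutoff kept tiny for the toy data, and made-up example rows; it is one possible approach, not a method prescribed by this framework.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Enrollment:
    """Hypothetical record: one row per student per course taken in a term."""
    student_id: str
    course: str
    persisted: bool  # True if the student enrolled again the following term


def barrier_courses(rows, min_enrolled=2, top_n=3):
    """Rank courses by the share of enrolled students who did not persist."""
    enrolled = defaultdict(int)
    not_persisted = defaultdict(int)
    for row in rows:
        enrolled[row.course] += 1
        if not row.persisted:
            not_persisted[row.course] += 1
    rates = {
        course: not_persisted[course] / n
        for course, n in enrolled.items()
        if n >= min_enrolled  # skip very small courses whose rates are unstable
    }
    # Highest non-persistence rate first.
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)[:top_n]


# Made-up example rows for illustration only.
rows = [
    Enrollment("s1", "CHEM 101", False),
    Enrollment("s2", "CHEM 101", True),
    Enrollment("s3", "CHEM 101", False),
    Enrollment("s1", "MATH 110", False),
    Enrollment("s2", "MATH 110", True),
    Enrollment("s4", "HIST 100", True),
    Enrollment("s5", "HIST 100", True),
    Enrollment("s6", "ART 105", False),  # only one student: excluded by min_enrolled
]

for course, rate in barrier_courses(rows):
    print(f"{course}: {rate:.0%} did not persist")
```

The result only describes which courses are associated with non-persistence; a student who leaves is counted in every course they took. Explaining why (the diagnostic question) still requires further analysis and stakeholder context, and a real cutoff would be far larger than two students.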

Regardless of analytics type, the first step in analysis is to validate the data, identify anomalies, and make any needed adjustments. Having validated, well-defined data elements is critical before moving forward in an analytics project. Once this preparation is complete, you can review trends in the data and begin to determine where underlying issues might impact the outcome you are seeking to improve.
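A minimal sketch of the validation step follows, assuming student records arrive as Python dictionaries with hypothetical `student_id` and `term_gpa` fields and that GPA is reported on a 0.0 to 4.0 scale (an assumption; adjust to your institution's scale). It flags missing and duplicate identifiers and out-of-range values rather than silently correcting them, so that data stewards can decide how each anomaly should be addressed.

```python
def validate_records(records, gpa_range=(0.0, 4.0)):
    """Return (row_index, issue) pairs for anomalies found in student records."""
    issues = []
    seen_ids = set()
    low, high = gpa_range
    for i, rec in enumerate(records):
        # Identifier checks: every record needs a unique, non-empty ID.
        student_id = rec.get("student_id")
        if not student_id:
            issues.append((i, "missing student_id"))
        elif student_id in seen_ids:
            issues.append((i, f"duplicate student_id {student_id}"))
        else:
            seen_ids.add(student_id)
        # Value checks: GPA must be present and within the expected scale.
        gpa = rec.get("term_gpa")
        if gpa is None:
            issues.append((i, "missing term_gpa"))
        elif not low <= gpa <= high:
            issues.append((i, f"term_gpa {gpa} outside {low}-{high}"))
    return issues


# Hypothetical records containing one of each kind of anomaly.
records = [
    {"student_id": "A1", "term_gpa": 3.2},
    {"student_id": "A1", "term_gpa": 2.9},  # duplicate ID
    {"student_id": "", "term_gpa": 3.0},    # missing ID
    {"student_id": "A3", "term_gpa": 4.7},  # out of range on a 4.0 scale
    {"student_id": "A4"},                   # missing GPA
]

for index, issue in validate_records(records):
    print(index, issue)
```

Returning a list of issues, rather than dropping rows, keeps the adjustments visible and documentable, which supports the shared data definitions discussed earlier in this framework.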

Quantitative and Qualitative Data

In any research initiative, the two types of data that can be collected are quantitative and qualitative. Table 3 describes each type of data.

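| Type of Data | Description | Examples |
|---|---|---|
| Quantitative Data | Numerical data that can be counted, measured, and analyzed statistically | Grades, retention and graduation rates, ratings on a 1–5 course evaluation scale |
| Qualitative Data | Non-numerical, descriptive data such as text, observations, and recorded conversations | Course evaluation comments, focus group and interview transcripts, communications between students and advisors |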

The intersection and effective use of qualitative and quantitative data within research, known as mixed methods, is a strategy that can be used not only to understand a phenomenon but also to gain perspective on how to approach the issue. End-of-term student course evaluations, for example, commonly consist of quantitative elements (such as selection from a 1–5 scale) and qualitative comments (text entry). The advantage of including qualitative data in analysis is that it can reveal in-depth insights into a phenomenon, as well as biases you may not initially notice.

In the case of student success analytics, a thorough investigation into a problem using mixed methods can reveal solutions or strategies that a single method of analysis would not. In practice, for example, mixed methods may involve a complementary use of predictive analytics and focus group interviews with students and stakeholders. This approach can help an institution gain an understanding of the potential severity of a problem (indicated by predictive data), while focus groups and interviews provide contextual nuance that can illuminate a solution, validate the predictive data, or indicate where algorithm refinement is warranted.

The field of artificial intelligence (AI), and more specifically machine learning with natural language processing, is being explored to automate the coding of large qualitative data sets. AI can process, for example, comments from course evaluations or transcripts of communications between students and advisors. This method of processing could help more efficiently identify and quantify findings that can be used in dashboards or nudge communications.[6]
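The sketch below illustrates the general idea of coding qualitative comments into themes and tallying them. To stay self-contained, it swaps in a simple keyword codebook in place of the machine learning and natural language processing approaches described above; the themes, keywords, and comments are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical codebook mapping each theme to keywords or phrases that signal it.
CODEBOOK = {
    "workload": ["workload", "too much", "overwhelming"],
    "clarity": ["unclear", "confusing", "clear"],
    "support": ["office hours", "helpful", "advisor"],
}


def code_comment(comment, codebook=CODEBOOK):
    """Return the set of themes whose keywords appear as whole words in a comment."""
    text = comment.lower()
    themes = set()
    for theme, keywords in codebook.items():
        # \b anchors prevent "clear" from matching inside "unclear".
        if any(re.search(r"\b" + re.escape(kw) + r"\b", text) for kw in keywords):
            themes.add(theme)
    return themes


def tally_themes(comments, codebook=CODEBOOK):
    """Count how many comments were coded with each theme."""
    counts = Counter()
    for comment in comments:
        counts.update(code_comment(comment, codebook))
    return counts


# Made-up course evaluation comments.
comments = [
    "The workload was overwhelming, but office hours were helpful.",
    "Lectures were confusing.",
    "Assignments were unclear and there was too much reading.",
    "Great course.",
]

print(tally_themes(comments))
```

Keyword matching ignores negation and sentiment ("not confusing" and "confusing" are coded identically) and misses synonyms outside the codebook, which is part of why machine learning approaches are being explored. Checking any automated coding against a hand-coded sample of comments is a reasonable safeguard before its output feeds dashboards or nudges.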

Types of Education Analytics

Student success analytics holistically integrates several conventional areas within education analytics for the purposes of positively impacting student experiences and outcomes. These include institutional analytics, learning analytics, and academic analytics. Each of these types of analytics is multidimensional and unique, and they overlap in a variety of ways. Table 4 describes the primary types of education analytics that can be used for student success analytics. Each of these analytics has tremendous value, but they can also be incomplete, contain implicit bias, or provide a misleading view of your students' experiences. Developing awareness of these potential shortcomings is an essential part of the process.

| Type | Uses | Examples | Typical Data Location |
|---|---|---|---|
| Institutional Analytics | Describes students and their outcomes at the macro/institutional level; used for understanding institution-level outcomes and trends | Retention and graduation rates; application data; demographics including race, ethnicity, and socioeconomic status; student engagement records; alumni and employer data | Student information systems, institutional data warehouses |
| Learning Analytics | Focuses on the learner rather than the institutional outcome; used for understanding and optimizing learning and the environments in which learning occurs[7] | Course-level data, including course performance such as grades, but more granular than typical academic measures | Learning management system, other technology platforms, institutional data warehouses |
| Academic Analytics | Describes academic outcomes within an institution; used for optimizing academic offerings and programs, with a focus that is less granular than learning analytics | Grades, major, student characteristics, and admission/prior learning information | Student information systems |

The design of a student success analytics initiative warrants thoughtful consideration of the combination of types of analytics, data, and data elements that will most effectively address the project's questions and goals. Any combination of these factors may inform opportunities for action to positively impact student experiences and outcomes. Any combination of these factors may also inadvertently harm an individual or group of students. It is important to be mindful of potential biases embedded within AI solutions, as well as how biases can become embedded anywhere along the continuum from data collection through analysis, interpretation, and action. Strategies to mitigate unintended consequences of data analytics for student success should be carefully considered and folded into institutional processes.

Decisions

A hallmark of student success analytics is its action-oriented nature. When you collect, synthesize, analyze, and socialize data, the ultimate goal is to inform decision-making for the purpose of impacting student experiences and outcomes, which invariably means deciding whether to act. This action-oriented approach applies the principles of research in ways that are agile and achievable.

A key consideration when identifying opportunities for action from a student success analytics initiative is that decisions should be data-informed rather than data-driven. Student success is highly complex and idiosyncratic to each student; the human element, therefore, must always be considered in decision-making. We must use data to inform our decisions while also considering what isn't reflected in our data stream. Data alone should not drive decisions.

When determining opportunities for action, be cognizant of inherent biases in the analysis you are performing, as well as the biases that may be embedded in the architecture and design of the data-collection processes. Practitioners may consider the principles of the Asilomar Convention, a resource for decisions about data use and knowledge sharing in higher education,[8] or the Global Guidelines for Ethics in Learning Analytics.[9] From collection through to action, the following ethical principles should be considered to ensure that your student success efforts truly contribute to student success.

- Do No Harm: To ensure that decisions based on analytics do not inadvertently harm students, thoughtfully consider any areas that are prone to missteps. Keep in mind that not using analytics for fear of causing harm (either to the institution or the students) is not a viable option. For instance, choosing not to intervene or share an identified risk indicator with students, for fear of harming their autonomy, deprives students of an opportunity to make data-informed decisions for themselves.

- Respect for Students: Transparency around data use and analytics is a foundational step toward demonstrating respect and building trust among students and other stakeholders. Without the trust of students and colleagues, your ability to capitalize on data to help students achieve their goals will be constrained.

- Accountability: As your institution's data capabilities expand, so does the need for accountability measures that aid in the ethical assessment of tools, strategies, and actions. Accountability structures should include the voices of various stakeholders: students, staff, faculty, and administrators. Multiple interests are likely to compete for the same resources, so an authoritative body may be needed to advocate for serving all students. Consider, for example, forming a student success analytics group on campus that rotates key data stakeholders each academic year to address a new key challenge. A team approach can help break down data silos and build trust.

- Autonomy, Privacy, and Equity: The goal in an analytics initiative is to leverage data to help students achieve success while also respecting their personal autonomy, protecting their privacy, and ensuring equity. Consider reviewing your analytics strategy for threats to autonomy, privacy, and equity. Explore alternate strategies if needed, and correct the processes that put students' success at risk.

Using student success analytics to change institutional policies and practice and to empower students in their learning remains central to the promise of diversity, equity, and inclusion (DEI) in higher education. When using analytics to inform DEI initiatives for student success, disaggregation is essential to identify patterns and address gaps. Disaggregation is not without its own inherent risks, however. For example, a combination of descriptive variables can inadvertently identify individual students in disaggregated data. Meanwhile, using predictive analytics to identify achievement gaps by student group can lead stakeholders to place the onus of change squarely on the shoulders of vulnerable populations. Faculty, staff, and administrators need to ensure that change initiatives do not simply label groups of students as unprepared but instead consider the changes the institution can make to mitigate risk factors. The EDUCAUSE Community Conversations podcast episode "Addressing DEI Issues through Analytics" offers valuable suggestions on the do's and don'ts of analytics with vulnerable populations.[10]
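One common safeguard against the re-identification risk of disaggregated data is small-cell suppression, in which rates are reported only for groups with at least a minimum number of students. The sketch below assumes hypothetical `group` and `retained` fields and an illustrative threshold of 10; institutions set their own thresholds, and suppression alone does not guarantee privacy, because a suppressed count can sometimes be recovered by subtraction from published totals.

```python
from collections import Counter


def disaggregate(records, group_key, outcome_key, min_cell=10):
    """Return the outcome rate per group, or None for suppressed groups."""
    totals = Counter()
    successes = Counter()
    for rec in records:
        group = rec[group_key]
        totals[group] += 1
        if rec[outcome_key]:
            successes[group] += 1
    report = {}
    for group, n in totals.items():
        # Suppress groups too small to report without risking re-identification.
        report[group] = None if n < min_cell else successes[group] / n
    return report


# Toy data: 12 students in group "A" (9 retained), 3 in group "B" (1 retained).
records = (
    [{"group": "A", "retained": i < 9} for i in range(12)]
    + [{"group": "B", "retained": i < 1} for i in range(3)]
)

print(disaggregate(records, "group", "retained"))  # {'A': 0.75, 'B': None}
```

Reporting `None` rather than dropping the group makes it explicit that a gap may exist but cannot be shown safely, which keeps the group visible in discussions of institutional change without exposing individual students.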

Conclusion

When developing action plan steps informed by a student success analytics initiative, practitioners should pursue both opportunities that can be enacted in the immediate future (e.g., rewriting syllabi for an inclusive tone) and those that will take place over a longer period (e.g., redesigning curriculum). Institutions commonly use multiple measures to determine outcomes without taking action to "close the loop" and enact change, yet data and student success do not cross the threshold into student success analytics unless and until there is a direct and imminent intent to take action and monitor progress.[11]

In the same way that educators often encourage students to adopt a growth mindset, we must be willing to accept the risk of failure, learn from mistakes or poor decisions, and apply that feedback toward refining our analytics practices. All decisions must be made with the intent to do good, to do no harm, and to improve student outcomes, but sometimes the decisions we make on the basis of limited data will not have the intended result. Accordingly, we need to iterate and strive to continuously improve. There is a cyclical nature to all that we do, those whom we serve, and the ways in which our data practices inform our service to students and their success.

Authors

Tasha Almond-Dannenbring

Sr. Data Analyst, HelioCampus

Melinda Easter

Sr. Project Manager and Service Manager for Student Success Analytics, University of Colorado Boulder

Linda Feng

Principal Software Architect, Unicon, Inc.

Maureen A. Guarcello

Research, Analytics, and Strategic Communications Specialist, San Diego State University

Marcia Ham

Learning Analytics Consultant, The Ohio State University

Szymon Machajewski

Assistant Director, Learning Technologies & Instructional Innovation, University of Illinois at Chicago

Heather Maness

Assistant Director, Learning Analytics and Assessment, University of Florida Information Technology

Andy Miller

Principal Educational Consultant - Analytics, Anthology

Shannon Mooney

Senior Data Scientist, Learning and Education, Georgetown University

Ayla Moore

Instructional Designer, Fort Lewis College

EDUCAUSE Staff

Emily Kendall

Communities Program Manager

Notes

1. John P. Kotter, Accelerate: Building Strategic Agility for a Faster-Moving World (Boston: Harvard Business Press Books, 2014).

2. Taylor Swaak, "The Puzzle of Student Data," Chronicle of Higher Education, 2022.

3. Travis York, Charles E. Gibson III, and Susan Rankin, "Defining and Measuring Academic Success," Practical Assessment, Research & Evaluation 20, no. 5 (March 2015).

4. Ibid.; Amelia Parnell, Darlena Jones, Alexis Wesaw, and D. Christopher Brooks, "Institutions' Use of Data and Analytics for Student Success: Results from a National Landscape Analysis" (Washington, DC: NASPA – Student Affairs Administrators in Higher Education, the Association for Institutional Research, and EDUCAUSE, 2018).

5. Alf Lizzio, "Designing an Orientation and Transition Strategy for Commencing Students: Applying the Five Senses Model," Griffith University, 2006.

6. Walaa Medhat, Ahmed Hassan, and Hoda Korashy, "Sentiment Analysis Algorithms and Applications: A Survey," Ain Shams Engineering Journal 5, no. 4 (December 2014): 1093–113.

7. First International Conference of Learning Analytics & Knowledge, Banff, Alberta, February 2011.

8. Justin Reich, "The Asilomar Convention: Revisiting Research Ethics and Learning Science," Education Week, June 20, 2014.

9. Sharon Slade and Alan Tait, "Global Guidelines: Ethics in Learning Analytics," International Council for Open and Distance Education, March 2019.

10. Kim Arnold, Jonathan Gagliardi, Wendy Puquirre, and John O'Brien, "Addressing DEI Issues through Analytics," February 16, 2022, in EDUCAUSE Community Conversations, podcast, audio, 2:4

11. George D. Kuh, Foreword to "From Gathering to Using Assessment Results: Lessons from the Wabash National Study," by Charles Blaich and Kathleen Wise (Urbana, IL: National Institute for Learning Outcomes Assessment Occasional Paper No. 8, 2011).

© EDUCAUSE 2022